Documentation for an AWS CI/CD pipeline built with AWS services.

Services Used and Count

| Service | Count |
| --- | --- |
| CloudWatch Event | 1 |
| Lambda Functions | 5 |
| EC2 Image Builder | 2 |
| CodePipeline | 2 |
| CodeCommit | 2 |
| CodeDeploy | 2 |
| SNS | 3 |
| EC2 Instance | 3 |
| Load Balancer | 1 |
| Launch Template | 2 |
| Auto Scaling Group | 1 |

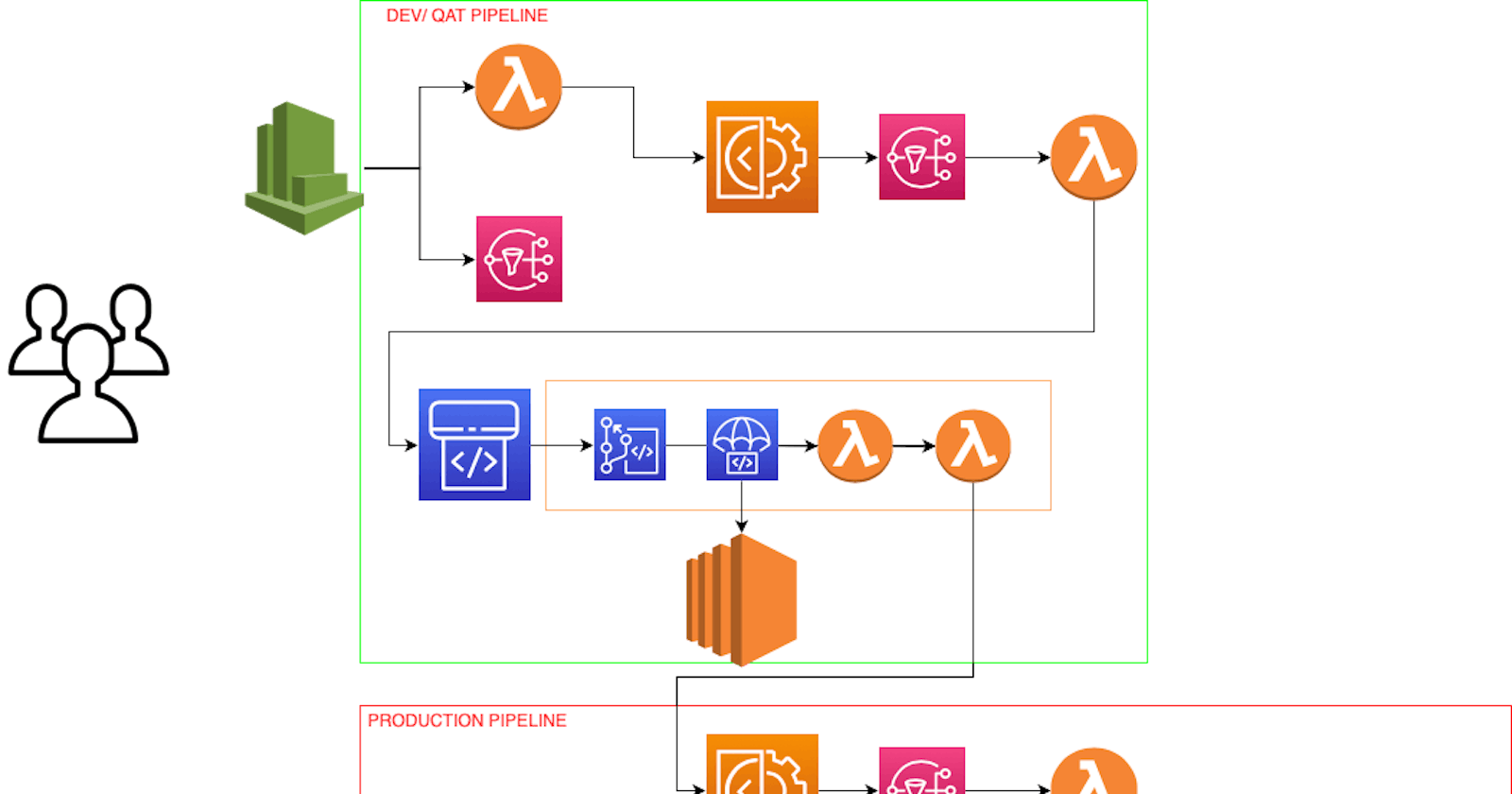

The process is split into two pipelines, QAT and Production. Both pipelines use a CodeCommit source attached to the same repository and the same `master` branch.

Custom settings created for this project

Both pipelines are started by Lambda functions instead of by CodePipeline's own change detection. This means a code push does not start a pipeline directly.

This project uses a more robust deployment approach. A new AMI is built on every code push, and instances are deployed from the latest AMI, with a blue/green strategy for production.

The architecture diagram above shows how these pieces fit together.

Steps for the Infrastructure Setup

1. Create a CodeCommit repository with a `master` branch.
2. Create an application in CodeDeploy.
3. Create a deployment group under the CodeDeploy application.
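The three setup steps can also be scripted with boto3. The sketch below is illustrative only. The repository and application names are hypothetical, and the clients are passed in so the function can be exercised with stubs. A new CodeCommit repository has no branches until the first commit is pushed, so `master` appears with that initial push.

```python
# Hypothetical setup sketch: pass boto3 clients, e.g. boto3.client("codecommit")
# and boto3.client("codedeploy"). All names are placeholders.


def create_source_and_apps(codecommit, codedeploy, repo_name, app_names):
    """Create the shared repository and one CodeDeploy application per environment."""
    repo = codecommit.create_repository(
        repositoryName=repo_name,
        repositoryDescription="Source for the QAT and Production pipelines",
    )
    app_ids = {}
    for name in app_names:
        # computePlatform "Server" means EC2/on-premises deployments.
        resp = codedeploy.create_application(
            applicationName=name, computePlatform="Server"
        )
        app_ids[name] = resp["applicationId"]
    # HTTPS clone URL, used for the first push that creates master.
    return repo["repositoryMetadata"]["cloneUrlHttp"], app_ids


# Example (hypothetical names):
# url, apps = create_source_and_apps(
#     boto3.client("codecommit"), boto3.client("codedeploy"),
#     "django-app", ["qat-app", "prod-app"],
# )
```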

The deployment group defines how the deployment runs, either blue/green or in-place.

For QAT, an in-place deployment is sufficient. For the production deployment group, use a blue/green deployment with the one-at-a-time configuration.

Two CodeDeploy applications are created, one for QAT and one for Production, so the two processes are independent of each other.
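Here is a sketch of the `create_deployment_group` arguments for the two environments. It makes several assumptions: the single QAT instance is selected by a hypothetical `Env=qat` tag, production uses the Auto Scaling group and a target group behind the load balancer, and the service role ARN and all names are placeholders. The blue/green options shown (copy the ASG, reroute traffic when ready, terminate the blue fleet after five minutes) are one reasonable choice, not the only one.

```python
# Hypothetical create_deployment_group() arguments for QAT and Production.
# Application/group names, the Env=qat tag, ASG and target group are placeholders.


def qat_group_params(service_role_arn):
    """In-place deployment to the instance tagged Env=qat."""
    return {
        "applicationName": "qat-app",
        "deploymentGroupName": "qat-dg",
        "serviceRoleArn": service_role_arn,
        "deploymentConfigName": "CodeDeployDefault.OneAtATime",
        "ec2TagFilters": [{"Key": "Env", "Value": "qat", "Type": "KEY_AND_VALUE"}],
        "deploymentStyle": {
            "deploymentType": "IN_PLACE",
            "deploymentOption": "WITHOUT_TRAFFIC_CONTROL",
        },
    }


def prod_group_params(service_role_arn, asg_name, target_group):
    """Blue/green deployment using the one-at-a-time configuration."""
    return {
        "applicationName": "prod-app",
        "deploymentGroupName": "prod-dg",
        "serviceRoleArn": service_role_arn,
        "deploymentConfigName": "CodeDeployDefault.OneAtATime",
        "autoScalingGroups": [asg_name],
        "loadBalancerInfo": {"targetGroupInfoList": [{"name": target_group}]},
        "deploymentStyle": {
            "deploymentType": "BLUE_GREEN",
            "deploymentOption": "WITH_TRAFFIC_CONTROL",
        },
        "blueGreenDeploymentConfiguration": {
            # Create the green fleet by copying the current ASG.
            "greenFleetProvisioningOption": {"action": "COPY_AUTO_SCALING_GROUP"},
            # Shift traffic as soon as the green instances are ready.
            "deploymentReadyOption": {"actionOnTimeout": "CONTINUE_DEPLOYMENT"},
            # Terminate the old (blue) instances five minutes after success.
            "terminateBlueInstancesOnDeploymentSuccess": {
                "action": "TERMINATE",
                "terminationWaitTimeInMinutes": 5,
            },
        },
    }
```

You would pass each dict as `boto3.client("codedeploy").create_deployment_group(**params)`.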

Next, create two Image Builder pipelines, one for QAT and one for Production.

Setting up the pipeline is straightforward. Most of the work is in the first stage, the recipe. Every customisation you need is added as a component in the recipe section. You can also attach AWS-provided (prebuilt) components to harden the security of the AMI that the pipeline produces.

Next, customise the infrastructure configuration stage. This is where you specify the SNS topic that receives a message once the AMI build is completed.

In the last stage, "Distribution settings", specify the launch template that should receive the new AMI, and set that new launch template version as the default.

The Production pipeline is configured the same way. Only the SNS topic and the launch template differ.
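The per-environment differences can live in two small builders for `create_infrastructure_configuration` and `create_distribution_configuration`. This is a sketch. The configuration names, instance profile, topic ARNs and launch template IDs are hypothetical, and the recipe and pipeline resources themselves are left out.

```python
# Hypothetical Image Builder settings; only the SNS topic and the launch
# template change between QAT and Production.


def infrastructure_params(env, instance_profile, sns_topic_arn):
    """kwargs for imagebuilder.create_infrastructure_configuration."""
    return {
        "name": f"{env}-infra-config",
        # Instance profile used by the temporary build instance.
        "instanceProfileName": instance_profile,
        # Image Builder publishes a notification here when the build finishes.
        "snsTopicArn": sns_topic_arn,
        "terminateInstanceOnFailure": True,
    }


def distribution_params(env, region, launch_template_id):
    """kwargs for imagebuilder.create_distribution_configuration."""
    return {
        "name": f"{env}-distribution",
        "distributions": [
            {
                "region": region,
                "launchTemplateConfigurations": [
                    {
                        "launchTemplateId": launch_template_id,
                        # Make the new version (with the new AMI) the default.
                        "setDefaultVersion": True,
                    }
                ],
            }
        ],
    }
```

The Production configuration would call the same builders with its own topic ARN and launch template ID.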

This completes the Image Builder pipeline setup.

Lambda Functions

There are five Lambda functions in total. Two start the Image Builder pipelines and two start the CodePipelines.

The fifth function runs inside the QAT CodePipeline. It checks who approved the approval stage, and it lets the pipeline continue to the next stage only if the two approvers are different users.

The first Lambda function is triggered by an event when code is pushed, and it starts the QAT Image Builder pipeline.
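Here is a sketch of the push trigger. It has two parts: an EventBridge (CloudWatch Events) pattern that matches pushes to `master`, and a handler that starts the QAT Image Builder pipeline. The default ARN is the QAT pipeline ARN that appears later in this post. The `IMAGE_PIPELINE_ARN` environment variable and the optional `imagebuilder` parameter (used for testing) are assumptions.

```python
import json
import os

# Hypothetical env var; the default is the QAT pipeline ARN used in this post.
QAT_IMAGE_PIPELINE_ARN = os.environ.get(
    "IMAGE_PIPELINE_ARN",
    "arn:aws:imagebuilder:us-east-1:66666:image-pipeline/"
    "qat-aws-well-architecht-imagebuilderpipe",
)


def push_event_pattern(repo_arn, branch="master"):
    """EventBridge pattern (as a JSON string) matching pushes to one branch."""
    return json.dumps({
        "source": ["aws.codecommit"],
        "detail-type": ["CodeCommit Repository State Change"],
        "resources": [repo_arn],
        "detail": {
            "event": ["referenceCreated", "referenceUpdated"],
            "referenceType": ["branch"],
            "referenceName": [branch],
        },
    })


def lambda_handler(event, context, imagebuilder=None):
    """Start the QAT Image Builder pipeline for a push to master."""
    if event.get("detail", {}).get("referenceName") != "master":
        return {"started": False}
    if imagebuilder is None:
        import boto3  # imported here so the module also loads without boto3

        imagebuilder = boto3.client("imagebuilder")
    resp = imagebuilder.start_image_pipeline_execution(
        imagePipelineArn=QAT_IMAGE_PIPELINE_ARN
    )
    return {"started": True, "imageBuildVersionArn": resp["imageBuildVersionArn"]}
```

To register the rule, call `events.put_rule(Name=..., EventPattern=push_event_pattern(repo_arn))`, then `events.put_targets(...)` pointing at the function. The function also needs a resource policy that allows `events.amazonaws.com` to invoke it.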

When the QAT Image Builder pipeline completes, an SNS notification is sent to the topic's subscribers. One of these subscribers is a Lambda function that starts the QAT CodePipeline.
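The SNS-triggered function can be sketched as follows. It assumes the Image Builder notification body is the image's JSON with a `state.status` field (`AVAILABLE` on success), so verify the message format in your account. The `PIPELINE_NAME` environment variable is hypothetical. Setting it to the production pipeline name would let the same code serve the Production function.

```python
import json
import os

# Hypothetical: set PIPELINE_NAME per function (QAT or Production pipeline).
PIPELINE_NAME = os.environ.get("PIPELINE_NAME", "qat-pipeline")


def build_succeeded(sns_message):
    """True if the (assumed) Image Builder message reports an AVAILABLE image."""
    image = json.loads(sns_message)
    return image.get("state", {}).get("status") == "AVAILABLE"


def lambda_handler(event, context, codepipeline=None):
    """Start the CodePipeline only after a successful AMI build."""
    message = event["Records"][0]["Sns"]["Message"]
    if not build_succeeded(message):
        return {"started": False}
    if codepipeline is None:
        import boto3  # imported here so the module also loads without boto3

        codepipeline = boto3.client("codepipeline")
    resp = codepipeline.start_pipeline_execution(name=PIPELINE_NAME)
    return {"started": True, "pipelineExecutionId": resp["pipelineExecutionId"]}
```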

Inside the QAT CodePipeline, CodeDeploy updates the instance using the latest launch template.

In the next stage, an email is sent to the QC team asking for approval. Once QC approves, an email is sent to the PMO, whose approval is also required.
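The QC and PMO approvals can be modelled inside one stage. It holds two Manual approval actions with increasing `runOrder`, followed by the Lambda check. The stage name `ProdcutionApproval` and the action `Approval-QT` come from the QC policy in this post. The names `Approval-PMO` and `CheckApprovers`, the topic ARNs and the function name are hypothetical.

```python
# Hypothetical approval stage for the QAT pipeline's "stages" list
# (as used in codepipeline.create_pipeline / update_pipeline).


def manual_approval(name, topic_arn, run_order, note):
    """A Manual approval action that emails subscribers of topic_arn."""
    return {
        "name": name,
        "actionTypeId": {"category": "Approval", "owner": "AWS",
                         "provider": "Manual", "version": "1"},
        "configuration": {"NotificationArn": topic_arn, "CustomData": note},
        "runOrder": run_order,
    }


def approval_stage(qc_topic, pmo_topic, check_function):
    """QC approval, then PMO approval, then the approver-check Lambda."""
    return {
        "name": "ProdcutionApproval",
        "actions": [
            manual_approval("Approval-QT", qc_topic, 1, "QC: please review the QAT build"),
            manual_approval("Approval-PMO", pmo_topic, 2, "PMO: please approve the release"),
            {
                # Verifies the two approvals came from different users.
                "name": "CheckApprovers",
                "actionTypeId": {"category": "Invoke", "owner": "AWS",
                                 "provider": "Lambda", "version": "1"},
                "configuration": {"FunctionName": check_function},
                "runOrder": 3,
            },
        ],
    }
```

Actions with a higher `runOrder` start only after the lower ones succeed, which gives the QC, then PMO, then check sequence.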

After both approvals are received, the Lambda function checks and confirms that the two approvers are different users.

Each user has a different IAM role with a custom policy.
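Here is a sketch of the approver check. It assumes that `get_pipeline_state` reports the approver's ARN in each action's `latestExecution.lastUpdatedBy`. Because QC and PMO use different IAM roles, their ARNs should differ. The pipeline, stage and action names follow the QC policy in this post, except `Approval-PMO`, which is hypothetical.

```python
import os

# Names from the QC policy in this post; "Approval-PMO" is hypothetical.
PIPELINE = os.environ.get("PIPELINE_NAME", "DjangoPIpeLine")
STAGE = "ProdcutionApproval"
APPROVAL_ACTIONS = ("Approval-QT", "Approval-PMO")


def approvers(pipeline_state, stage, actions):
    """Map each approval action to the ARN that last updated it."""
    found = {}
    for stage_state in pipeline_state.get("stageStates", []):
        if stage_state.get("stageName") != stage:
            continue
        for action in stage_state.get("actionStates", []):
            if action.get("actionName") in actions:
                latest = action.get("latestExecution", {})
                found[action["actionName"]] = latest.get("lastUpdatedBy")
    return found


def approvers_distinct(pipeline_state, stage=STAGE, actions=APPROVAL_ACTIONS):
    """True only if every approval has an approver and all approvers differ."""
    found = approvers(pipeline_state, stage, actions)
    arns = [found.get(name) for name in actions]
    return None not in arns and len(set(arns)) == len(arns)


def lambda_handler(event, context, codepipeline=None):
    """CodePipeline Invoke action: pass only if the approvers are different."""
    if codepipeline is None:
        import boto3  # imported here so the module also loads without boto3

        codepipeline = boto3.client("codepipeline")
    job_id = event["CodePipeline.job"]["id"]
    state = codepipeline.get_pipeline_state(name=PIPELINE)
    if approvers_distinct(state):
        codepipeline.put_job_success_result(jobId=job_id)
    else:
        codepipeline.put_job_failure_result(
            jobId=job_id,
            failureDetails={"type": "JobFailed",
                            "message": "Both approvals came from the same user"},
        )
```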

The QC user can only list pipelines, read this pipeline, and approve one specific approval action. This is done with the following policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "codepipeline:ListPipelines"
      ],
      "Resource": [
        "*"
      ],
      "Effect": "Allow"
    },
    {
      "Action": [
        "codepipeline:GetPipeline",
        "codepipeline:GetPipelineState",
        "codepipeline:GetPipelineExecution"
      ],
      "Resource": [
        "arn:aws:codepipeline:us-east-1:666666:DjangoPIpeLine"
      ],
      "Effect": "Allow"
    },
    {
      "Action": [
        "codepipeline:PutApprovalResult"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:codepipeline:us-east-1:6666:DjangoPIpeLine/ProdcutionApproval/Approval-QT"
      ]
    }
  ]
}
```
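The QC and PMO policies differ only in the approval action, so a small helper can generate both. This is a hypothetical sketch. The PMO action name `Approval-PMO` is an assumption, and the account ID is a placeholder.

```python
# Hypothetical helper that builds the approver policy shown above for any
# pipeline/stage/action. The account ID and "Approval-PMO" are placeholders.


def approver_policy(region, account, pipeline, stage, action):
    """Allow listing pipelines, reading one pipeline, and approving one action."""
    pipeline_arn = f"arn:aws:codepipeline:{region}:{account}:{pipeline}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["codepipeline:ListPipelines"], "Resource": ["*"]},
            {
                "Effect": "Allow",
                "Action": [
                    "codepipeline:GetPipeline",
                    "codepipeline:GetPipelineState",
                    "codepipeline:GetPipelineExecution",
                ],
                "Resource": [pipeline_arn],
            },
            {
                "Effect": "Allow",
                "Action": ["codepipeline:PutApprovalResult"],
                # Approval actions are addressed as pipeline/stage/action.
                "Resource": [f"{pipeline_arn}/{stage}/{action}"],
            },
        ],
    }


qc_policy = approver_policy(
    "us-east-1", "666666", "DjangoPIpeLine", "ProdcutionApproval", "Approval-QT"
)
pmo_policy = approver_policy(
    "us-east-1", "666666", "DjangoPIpeLine", "ProdcutionApproval", "Approval-PMO"
)
```

`json.dumps(pmo_policy)` produces the document string that `iam.create_policy(PolicyName=..., PolicyDocument=...)` expects.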

Once this stage passes, the pipeline invokes the Lambda function that starts the Production Image Builder pipeline:

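```python
import json

import boto3

image_pipeline = boto3.client("imagebuilder")
pipeline = boto3.client("codepipeline")

PROD_IMAGE_PIPELINE_ARN = (
    "arn:aws:imagebuilder:us-east-1:66666:image-pipeline/prod-imagebuilder-pipeline"
)


def lambda_handler(event, context):
    # This function runs as a CodePipeline Invoke action, so it must report the
    # job result back; otherwise the stage waits until the action times out.
    job_id = event["CodePipeline.job"]["id"]
    try:
        # Start a new build of the production AMI.
        image_build = image_pipeline.start_image_pipeline_execution(
            imagePipelineArn=PROD_IMAGE_PIPELINE_ARN
        )
    except Exception as exc:
        pipeline.put_job_failure_result(
            jobId=job_id,
            failureDetails={"type": "JobFailed", "message": str(exc)},
        )
        return {"statusCode": 500, "body": json.dumps(str(exc))}

    pipeline.put_job_success_result(jobId=job_id)
    return {
        "statusCode": 200,
        "body": json.dumps(image_build["imageBuildVersionArn"]),
    }
```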

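The execution role for the Image Builder trigger functions needs these permissions. The CodePipeline statement covers the `put_job_*_result` calls.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "imagebuilder:GetImagePipeline",
        "imagebuilder:StartImagePipelineExecution"
      ],
      "Resource": [
        "arn:aws:imagebuilder:us-east-1:66666:image-pipeline/qat-aws-well-architecht-imagebuilderpipe",
        "arn:aws:imagebuilder:us-east-1:66666:image-pipeline/prod-imagebuilder-pipeline"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "codepipeline:PutJobSuccessResult",
        "codepipeline:PutJobFailureResult"
      ],
      "Resource": "*"
    }
  ]
}
```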

When the Production Image Builder pipeline completes, it sends a message to its SNS topic. That message triggers the Lambda function that starts the Production CodePipeline.

The production CodePipeline has only two stages: a CodeCommit source stage and a CodeDeploy deploy stage. There is no build stage because there is nothing to build.

Here CodeDeploy performs a blue/green deployment.
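The two-stage production pipeline could be declared as below, as the `stages` part of a `create_pipeline` request. The repository, application and deployment group names are hypothetical. `PollForSourceChanges` is set to `"false"` so the source stage does not poll. This assumes that no change-detection EventBridge rule was created for this pipeline either.

```python
# Hypothetical "stages" list for the Production pipeline: Source -> Deploy.


def production_stages(repo, branch, application, deployment_group):
    """CodeCommit source stage followed by a CodeDeploy deploy stage."""
    return [
        {
            "name": "Source",
            "actions": [{
                "name": "Source",
                "actionTypeId": {"category": "Source", "owner": "AWS",
                                 "provider": "CodeCommit", "version": "1"},
                "configuration": {
                    "RepositoryName": repo,
                    "BranchName": branch,
                    # Runs are started by the SNS-triggered Lambda instead.
                    "PollForSourceChanges": "false",
                },
                "outputArtifacts": [{"name": "SourceOutput"}],
                "runOrder": 1,
            }],
        },
        {
            "name": "Deploy",
            "actions": [{
                "name": "Deploy",
                "actionTypeId": {"category": "Deploy", "owner": "AWS",
                                 "provider": "CodeDeploy", "version": "1"},
                "configuration": {
                    "ApplicationName": application,
                    "DeploymentGroupName": deployment_group,
                },
                "inputArtifacts": [{"name": "SourceOutput"}],
                "runOrder": 1,
            }],
        },
    ]
```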

The most important file in the repository is `appspec.yml`. CodeDeploy reads it during the deployment to learn which files to copy and which lifecycle hook scripts to run.

It can range from simple to sophisticated.
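A minimal `appspec.yml` for an EC2 deployment is sketched below. It is generated from Python only to keep the examples in one language. The install path and hook script names are hypothetical.

```python
# Hypothetical minimal appspec.yml for an EC2/on-premises deployment.


def render_appspec(destination, hooks):
    """Render appspec.yml text; hooks is a list of (lifecycle_event, script)."""
    lines = [
        "version: 0.0",
        "os: linux",
        "files:",
        "  - source: /",
        f"    destination: {destination}",
        "hooks:",
    ]
    for event_name, script in hooks:
        lines += [
            f"  {event_name}:",
            f"    - location: {script}",
            "      timeout: 300",
            "      runas: root",
        ]
    return "\n".join(lines) + "\n"


APPSPEC = render_appspec(
    "/home/ec2-user/app",  # hypothetical install path
    [
        ("AfterInstall", "scripts/install_dependencies.sh"),
        ("ApplicationStart", "scripts/start_server.sh"),
        ("ValidateService", "scripts/validate_service.sh"),
    ],
)
```

The resulting text would be committed as `appspec.yml` at the repository root, next to the hypothetical `scripts/` directory.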

Once the production pipeline completes, the ASG contains two new instances running the latest AMI.

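Prerequisites

For safety, and to avoid many changes later, first set up an instance with the working code and its requirements, then create an AMI from it.

Hardcode the IAM instance profile (the CodeDeploy role) in the launch template, so that every instance created from the template gets it.

For production, you can configure the ASG to launch instances in private subnets.

Attach the load balancer to the CodeDeploy deployment group. Then allow the load balancer's security group in the instance security group's inbound rules, so the load balancer can reach the instances in the private subnets. The CodeDeploy agent itself connects outbound to CodeDeploy, so private instances also need outbound HTTPS access, for example through a NAT gateway or VPC endpoints.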
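The security group rule and launch template settings from these prerequisites can be sketched as boto3 request arguments. The IDs, port 80, instance type and instance profile name are hypothetical.

```python
# Hypothetical EC2 request arguments for the prerequisites above.


def lb_to_instance_ingress(instance_sg_id, lb_sg_id, port=80):
    """kwargs for ec2.authorize_security_group_ingress: allow only the LB's SG."""
    return {
        "GroupId": instance_sg_id,
        "IpPermissions": [{
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            # Reference the load balancer's security group instead of a CIDR.
            "UserIdGroupPairs": [{"GroupId": lb_sg_id,
                                  "Description": "traffic from load balancer"}],
        }],
    }


def launch_template_params(name, ami_id, instance_type, instance_profile, sg_ids):
    """kwargs for ec2.create_launch_template with the instance profile baked in."""
    return {
        "LaunchTemplateName": name,
        "LaunchTemplateData": {
            "ImageId": ami_id,
            "InstanceType": instance_type,
            # Instance profile carrying the role the CodeDeploy agent uses.
            "IamInstanceProfile": {"Name": instance_profile},
            "SecurityGroupIds": sg_ids,
        },
    }
```

Each dict would be passed with `**` to the matching `boto3.client("ec2")` call.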